Datacentres Latest News

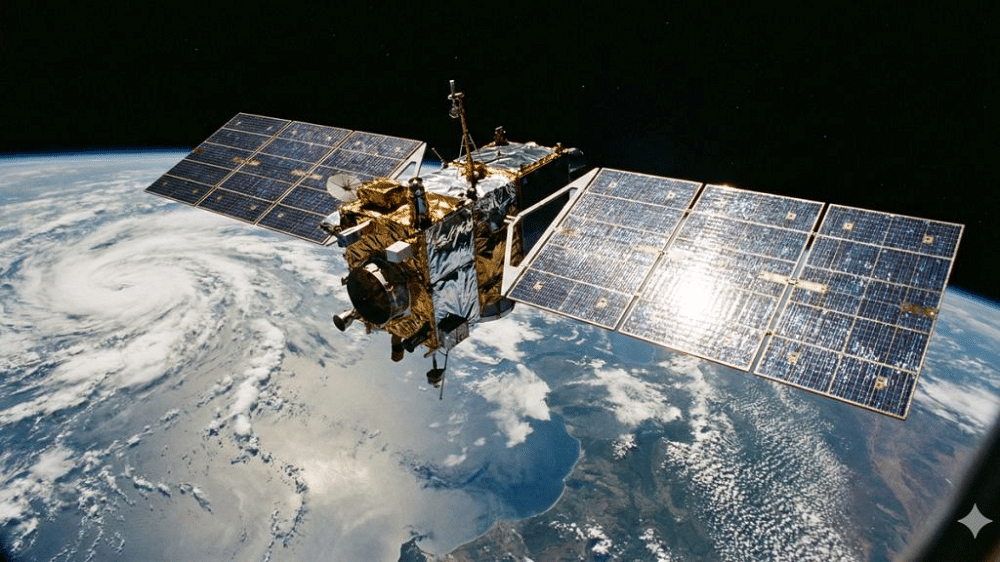

- Technology firms and space agencies are exploring space-based datacentres to address the rapidly rising energy demand of artificial intelligence workloads.

Rising Energy Demand from Artificial Intelligence

- Datacentres are becoming one of the fastest-growing consumers of electricity worldwide, and artificial intelligence is significantly accelerating this trend.

- Modern AI systems rely on large clusters of graphics processing units (GPUs) and specialised accelerators to train and deploy machine learning models.

- These systems require continuous, high-density computing, leading to enormous power consumption.

- Unlike traditional datacentres, which primarily support content delivery and cloud services, AI datacentres do most of their energy-intensive work internally, on computation and data movement within the facility.

- High-speed data exchange between servers within the same facility or across nearby facilities is essential for training large language models.

- As the adoption of generative AI expands across sectors, concerns over sustainability, carbon emissions, and grid stress are becoming increasingly prominent.

Concept of Space-Based Datacentres

- To address these challenges, researchers are exploring the idea of placing datacentres in low-Earth orbit.

- The central idea is to power datacentres entirely using solar energy in space, where sunlight is more intense than at Earth's surface and, in suitably chosen orbits, nearly uninterrupted.

- This approach aims to bypass terrestrial constraints such as land availability, cooling limitations, and dependence on fossil fuel-based electricity grids.

- Google Research’s Project Suncatcher proposes deploying clusters of satellites equipped with computing hardware that can process AI workloads in space.

- These satellites would operate in carefully choreographed orbits that maintain constant exposure to sunlight, ensuring an uninterrupted power supply through solar panels.

Technical Architecture and Design Principles

- A key feature of orbital datacentres is their reliance on dense inter-satellite communication rather than high-speed connections with Earth.

- AI workloads require extremely high internal bandwidth to allow different processors to work in parallel.

- In space-based systems, this would be achieved through closely spaced satellites communicating with one another using advanced multiplexing and high-frequency links.

- Since most data movement occurs within the system itself, the bandwidth required to communicate with ground stations is relatively modest.

- This mirrors terrestrial AI systems, where user queries require limited bandwidth compared to internal data transfers during model training.

Engineering Challenges in Space Deployment

- Despite the conceptual promise, several technical challenges remain. One major concern is exposure to solar and cosmic radiation.

- Long-term radiation can degrade semiconductor components, affecting performance and reliability.

- Initial tests conducted by Google indicate that some specialised AI chips can tolerate higher radiation levels than expected, but long-duration missions still pose risks.

- Thermal management presents another major challenge. On Earth, datacentres rely on air or liquid cooling systems.

- In space, where there is no atmosphere to carry heat away by convection, waste heat can be shed only by radiating it, which makes heat dissipation significantly more complex.

- Datacentres in orbit would continuously absorb solar radiation while lacking conventional cooling mechanisms, requiring advanced heat dissipation technologies.

- Maintenance is also a critical issue. Unlike terrestrial facilities, repairing or replacing faulty hardware in space is expensive and logistically difficult.

- This raises concerns about system resilience and long-term operational reliability.
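To make the thermal management point above more concrete, here is a minimal sketch that estimates how much radiator surface would be needed to reject waste heat purely by radiation, using the Stefan-Boltzmann law. It is an idealised calculation, not a design method from Google or ISRO. The function name, the 1 kW heat load, the emissivity of 0.9 and the radiator temperatures are all illustrative assumptions, and the model ignores absorbed sunlight and infrared from Earth, both of which would increase the required area.

```python
# Illustrative sketch only: idealised radiator sizing via the Stefan-Boltzmann law.
# All input values below are assumptions, not figures from the article.
STEFAN_BOLTZMANN = 5.670374419e-8  # W / (m^2 K^4)


def radiator_area_m2(heat_load_w: float,
                     radiator_temp_k: float,
                     emissivity: float = 0.9) -> float:
    """Area needed to radiate heat_load_w to deep space from one surface.

    Assumes no absorbed sunlight, no infrared from Earth, a uniform
    radiator temperature, and a background close to 0 K.
    """
    if heat_load_w < 0 or radiator_temp_k <= 0 or not 0 < emissivity <= 1:
        raise ValueError("invalid input")
    # Radiated power per square metre of radiator surface.
    flux_w_per_m2 = emissivity * STEFAN_BOLTZMANN * radiator_temp_k ** 4
    return heat_load_w / flux_w_per_m2


if __name__ == "__main__":
    # Hypothetical 1 kW of waste heat at three assumed radiator temperatures.
    for temp_k in (280.0, 300.0, 350.0):
        area = radiator_area_m2(1000.0, temp_k)
        print(f"{temp_k:.0f} K radiator: {area:.2f} m^2 per kW of waste heat")
```

Because radiated power scales with the fourth power of temperature, running the radiator hotter shrinks the required area quickly, but the computing hardware must then tolerate that higher temperature.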

Economic Viability and Cost Considerations

- The economic feasibility of space-based datacentres depends heavily on launch costs and hardware durability.

- Launching equipment into orbit is currently expensive, but projections suggest that launch costs may decline substantially over the next decade.

- Google estimates that costs could fall to around $200 per kilogram by the mid-2030s, potentially improving the commercial viability of orbital datacentres.

- However, space-based solutions must remain competitive with rapidly advancing ground-based technologies.

- Improvements in renewable energy integration, cooling efficiency, and energy storage on Earth could reduce the relative advantage of orbital systems.

- Past experiments, such as underwater datacentres, demonstrated technical promise but were eventually discontinued due to economic constraints.
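The sensitivity to launch price described above can be sketched as simple arithmetic. The snippet below multiplies payload mass by price per kilogram. Only the $200 per kilogram figure comes from the article; the 1,000 kg payload mass and the higher comparison prices are hypothetical placeholders chosen to show the scaling, not real quotes or hardware specifications.

```python
# Illustrative sketch only: launch cost as payload mass times price per kilogram.
PROJECTED_PRICE_USD_PER_KG = 200.0   # mid-2030s estimate cited in the article
HYPOTHETICAL_PAYLOAD_KG = 1000.0     # assumed mass, not a real satellite spec


def launch_cost_usd(payload_mass_kg: float, price_per_kg_usd: float) -> float:
    """Return the cost of lifting payload_mass_kg at a flat per-kilogram price."""
    if payload_mass_kg < 0 or price_per_kg_usd < 0:
        raise ValueError("mass and price must be non-negative")
    return payload_mass_kg * price_per_kg_usd


if __name__ == "__main__":
    # The two higher prices are hypothetical comparison points.
    for price in (2000.0, 1000.0, PROJECTED_PRICE_USD_PER_KG):
        cost = launch_cost_usd(HYPOTHETICAL_PAYLOAD_KG, price)
        print(f"${price:,.0f}/kg -> ${cost:,.0f} for {HYPOTHETICAL_PAYLOAD_KG:,.0f} kg")
```

This covers launch alone. A fuller comparison with ground-based facilities would also need hardware lifetime, replacement launches and the cost of terrestrial power and cooling, none of which the article quantifies.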

India’s Interest in Space-Based Datacentres

- India is also showing interest in this emerging domain.

- The Indian Space Research Organisation (ISRO) is reportedly studying space-based datacentre technologies as part of broader efforts to explore commercial and strategic uses of space infrastructure.

- Given India’s growing AI ecosystem and renewable energy ambitions, space-based computing could become a long-term area of research and collaboration.

- This aligns with India’s broader push towards leveraging space technology for civilian, scientific, and commercial applications, while also addressing sustainability challenges associated with digital expansion.

Source: TH

Datacentres FAQs

Q1: Why are datacentres consuming more energy today?

Ans: AI workloads require dense, continuous computing using GPUs, significantly increasing electricity consumption.

Q2: What is a space-based datacentre?

Ans: It is a computing facility placed in orbit that uses solar energy to run AI workloads.

Q3: Why is internal bandwidth more important than ground connectivity for AI datacentres?

Ans: AI systems require extremely high-speed data exchange within the computing infrastructure itself.

Q4: What are the major challenges of datacentres in space?

Ans: Radiation exposure, thermal management, maintenance, and high launch costs are key challenges.

Q5: Is India exploring space-based datacentres?

Ans: Yes, ISRO is reportedly studying the feasibility of space-based datacentre technology.